“Artificial intelligence will reach human levels by around 2029. Follow that out further to, say, 2045, we will have multiplied the intelligence, the human biological machine intelligence of our civilization a billion-fold.”

—Ray Kurzweil

Introduction:

It is repeatedly said that 2020 has been a special year, mostly with reference to the ongoing covid-19 pandemic. But it has also been an interesting year for AI. In this article, we reflect on the political events, regulations, technology innovations, and societal changes we have seen. We also look towards the future and ask what could happen in 2021.

Understanding the changes happening around us is not only a question of what actually happens, but also of understanding the narratives surrounding the events. Policymakers, innovators, researchers, influencers, and “ordinary Joes and Janes” often have competing narratives. The story of AI as a savior is challenged by more cynical or apocalyptic ones. Arguments about efficiency gains are set against concerns about personal integrity and a “tech” backlash, and the view of radical innovation as the door to the future is questioned by concerns about social disruption and the end of nation-states.

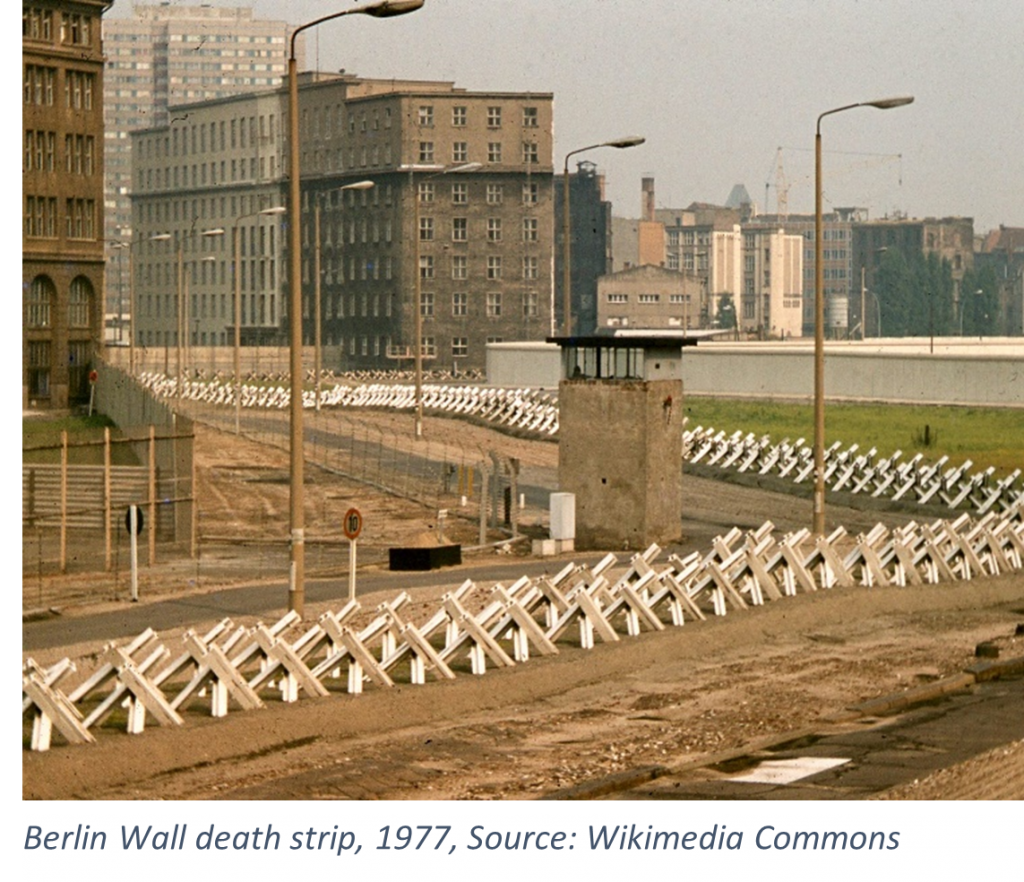

Global – technology and sovereignty: “Build the wall! We have no choice!”

For decades, globalization and free trade made the world more open and for a lot of people, it created a sense of belonging to a growing global community connected through shared goals. As we all grew to depend on each other, the interconnections between countries and people made the world safer. This has changed.

For a couple of years, we have seen the world slowly move away from a long period of globalization, free trade, and travel towards closed borders. In 2020, the coronavirus amplified this trend with lockdowns in many states. It made countries compete with each other on death statistics instead of collaborating. Brexit, the Trump administration, Hong Kong, and the migration debate in Europe all serve as examples of the world becoming less open and more inward-looking. Populist ideas have made many societies more narrow-minded, making it more difficult for the world to deal with global problems like the climate crisis.

This is not only affecting the physical world. It is shaping the digital world as well. Some countries are building information walls to protect data using national regulations. As a result, we see growing data lakes and ecosystems that are not connected and cannot share data due to political or legal concerns.

It is not only data. We also see diverging technology stacks being created from the ground up: one in China, one in the Western democracies, and an emerging one in Russia. These ecosystems differ across both hardware and software. In the long run, this could turn the Internet into the Splinternet, described by the author Doc Searls as the “growing distance between the ideals of the Internet and the realities of dysfunctional nationalisms…”

As a result, in 2020, the battle for technological superiority between the US and China has become more intense, while Russia regularly fires cyber cannons from the sidelines.

What about Europe?

Europe has both good and bad traditions but is one of the early thinkers on data protection. The European Convention on Human Rights (ECHR), signed in Rome in 1950 after the Second World War, can be seen as the foundation for how Europe looks at privacy and data. Before the digital revolution, this was relatively simple. However, now that every part of our society is doing what it can to digitalize its business, the complexity has increased dramatically. After decades of discussions on privacy in a digital world, Europe finally landed on the General Data Protection Regulation (GDPR), balancing different stakeholders and their needs for data. Will GDPR be the ultimate solution to the complex privacy equation? Hardly.

As an example, GDPR and AI in a data-driven society do not necessarily play well with each other. During the year, we have seen an increasing number of debates on the challenges with GDPR. One example comes from the Confederation of Swedish Enterprise (Brinnen & Westman, 2019). If we cannot realize the benefits of AI because of legal issues, we may see a renewed debate on GDPR and AI in 2021.

One core question is whether GDPR is overly protective and stops innovation that could help us move forward. Suppose you are a patient with severe cancer. In that case, your data could help the global research community find essential knowledge using machine learning. Would you want to contribute your data to help? Most people would likely say yes, but today there are so many legal challenges in scenarios like this that many projects give up.

What about Sweden?

The security incident at the Swedish Transport Agency in 2017 seems to have transformed Sweden into an outlier in Europe regarding data protection. Many people have forgotten the details of the incident, but the ripple effects should not be underestimated. The political consequences (two ministers had to resign) created a risk-averse environment for new services like AI. Organizations, especially in the public sector, became afraid to be the first to try new digital solutions and innovations. Nobody wants to go first and risk facing criticism. What are the consequences of several years of risk-averse behaviour? Indexes do not always paint the full picture. Still, the first Digital Government Index, released by the OECD in 2020, raised many eyebrows. The index assesses governments’ adoption of strategic approaches in the use of data and digital technologies. It covers 33 countries worldwide, and Sweden has for decades been at the top of various indicators measuring digitalization.

Sweden was last (OECD, 2020).

In the meantime: Technology moves on. Next-generation AI models.

In the summer of 2020, OpenAI announced its ground-breaking GPT-3 model, the largest and most advanced language model in the world (MIT Technology Review, 2020).

OpenAI’s GPT-3 is a language model that outputs amazingly human-like text. To date, GPT-3 is the largest and most advanced language model in the world, with 175 billion parameters. Size matters when it comes to language models. The predecessor, GPT-2, was comparatively small, with 1.5 billion parameters. Used correctly, this language model can write text that is difficult to distinguish from text written by a human. For example, in September, it wrote an opinion piece in The Guardian (GPT-3, 2020). For the essay, GPT-3 was given these instructions: “Please write a short op-ed around 500 words. Keep the language simple and concise. Focus on why humans have nothing to fear from AI”, together with a couple of other sentences of instruction.

The abilities of GPT-3 go well beyond what we have seen before, and these types of AI models will be able to help us both analyze and present the world’s information. This is a huge achievement, made possible mainly by massive amounts of computing power. The cost of training such a large model is, however, huge. If a 1,000-parameter model costs 10 SEK, then training a 175-billion-parameter model could cost hundreds of millions of SEK (Sharir, Peleg, & Shoham, 2020). This means it becomes more and more costly to train state-of-the-art ML models, suggesting that we might end up with a couple of “super trainers” selling the best AI models through a global API-based economy.

At the same time, we see discussions of stagnation when it comes to machine learning. How much better will it really get, and at what cost? The cost in energy, money, and environmental impact (CO2) of dramatically improving the ImageNet error rate (used in visual object recognition) with existing models could be astronomical (Thompson, Lee, Manso, & Greenewald, n.d.). New research focused on improving current models while using less compute and fewer data points will be important.

AI in other areas

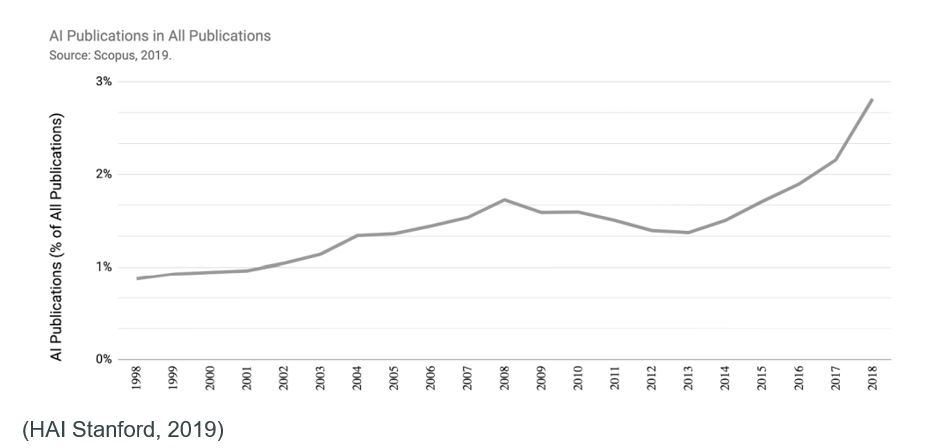

In areas like biology and medicine, AI continues to become an essential tool in advancing research. If you are a future lawyer, biologist, doctor, or archeologist, data will be your fuel and machine learning your tool. As a result, we see AI being referred to in more and more publications outside the technology domains. The need for individuals with knowledge of machine learning in these areas is likely to increase steadily.

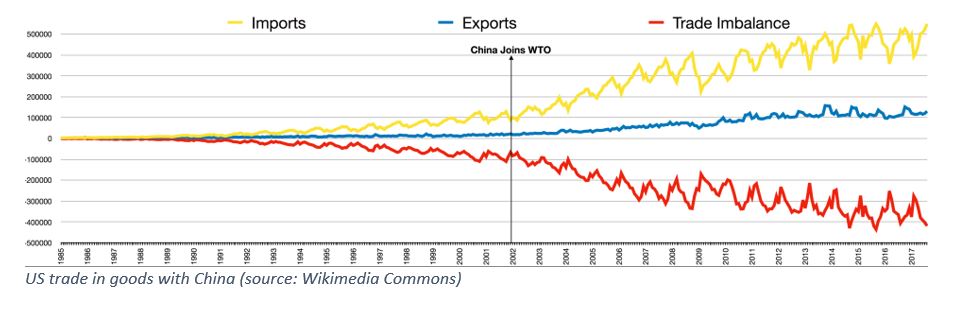

In the US and China

The US and China continued the trade war that started in 2018, when the US imposed duties on 34 billion USD worth of imports from China. Since then, we have seen a rising AI and information war between China and the US, culminating in the ban of TikTok in November 2020 (which has since turned into a court process). There continue to be huge AI investments from both superpowers, along with moves to block acquisitions of companies with strategic AI value. One recent example is NVIDIA’s announced purchase of ARM, which could create a new technology balance when it comes to computer chips.

On ethics

The discussion on AI and ethics has gone from understanding how to write an AI code of conduct to figuring out how to apply it in reality. In Sweden, the Swedish IT and Telecom Industries created a code of conduct (ethical code) on AI for Swedish companies (IT&Telekomföretagens branschkod för AI, 2020). The code of conduct covers AI for more human societies, sustainability, trust, explainability, and inclusion. These topics have been discussed a lot over the last couple of years, and the question is: are we making improvements?

Let’s look at the topic of explainability. One of the issues with AI is that some models, especially more complex deep learning models, are challenging for an expert to understand and impossible for a non-expert. Imagine if a deep learning algorithm used all available data from your body and suggested that you should remove your kidney. The algorithm is sure that this is important but cannot give you an explanation of why. Would you do it?

Well, we would probably like to understand a little more about how the recommendation was formed. One exciting research area, eXplainable AI (XAI), aims to enhance AI models with explainability and transparency. Today, many of these models can be explained using automatic tools like InterpretML from Microsoft or the Explainable AI tools from Google. These tools are becoming a critical factor when using AI models in sensitive use cases, helping us better understand what is going on under the hood.
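
To make the idea of explaining a black-box model more concrete, here is a minimal sketch of one generic, model-agnostic technique known as permutation feature importance: shuffle one input at a time and measure how much the model's error grows. It is only an illustration and is not meant to describe how InterpretML or Google's tools work internally. The `model` function, the three made-up feature names, and the random data are all assumptions invented for this example.

```python
import random


def model(row):
    # Hypothetical "black box" standing in for a trained model (an assumption
    # for this sketch). It leans heavily on feature 0, slightly on feature 1,
    # and ignores feature 2 entirely.
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(predict, rows, targets):
    # Average squared difference between predictions and targets.
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(predict, rows, targets, n_features, seed=0):
    # For each feature, shuffle its column across the rows and record how much
    # the error increases compared with the unshuffled baseline.
    rng = random.Random(seed)
    baseline = mean_squared_error(predict, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled_rows = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        shuffled_error = mean_squared_error(predict, shuffled_rows, targets)
        importances.append(shuffled_error - baseline)
    return importances


# Made-up data: 200 rows with three random features each.
data_rng = random.Random(42)
rows = [[data_rng.random() for _ in range(3)] for _ in range(200)]
# The targets are the model's own outputs, so the baseline error is zero.
targets = [model(r) for r in rows]

feature_names = ["feature_a", "feature_b", "feature_c"]  # hypothetical names
importances = permutation_importance(model, rows, targets, n_features=3)

for name, score in zip(feature_names, importances):
    print(f"{name}: {score:.3f}")
```

In this toy setup, the feature the model ignores receives an importance of exactly zero, while the feature with the largest weight receives the highest score. Without opening the model, we still learn which inputs drive its recommendation, which is the kind of insight a patient or doctor would want before acting on it.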

It is, however, unlikely that explainability will be the biggest hurdle when it comes to AI. Instead, it will likely be the challenge of how to balance the positive uses of AI with the harmful ones.

As Lars von Trier puts it in the TV miniseries Riget: “You have to take the good with the bad.” One area where this debate has been apparent is AI in facial recognition.

An attempt to use facial recognition to automatically detect attendance in the classroom at Anderstorps gymnasium violated GDPR, according to the Swedish DPA. The high school board was fined 200 000 SEK. Another example: the controversial company Clearview provides facial recognition software and has developed technology that can match faces to a database of more than three billion images scraped from the Internet. It has been confirmed that the Swedish police have used the software. The more insecure we feel in the world, the more of these services we are willing to accept.

The transformational power of AI and digitalization: Looking back and beyond tomorrow

It goes without saying that the Internet and digital communication tools have had an unparalleled impact on society and on people’s everyday lives, in various ways.

Two decades ago, the Spanish sociologist Manuel Castells talked about the information and network society as typical of what his Polish-British colleague Zygmunt Bauman had earlier referred to as late (or liquid) modernity. A typical feature of such a society is that people’s sense of space has changed.

On space

Today, the world is much closer. The global and the local are intertwined through business, brands, ideas, and culture as a whole. These technology-driven shifts have profoundly changed the way people communicate, view themselves, and go about their lives.

Technological innovation has also been portrayed as the solution to all the grand challenges facing humanity. Poverty, austerity in the welfare sector, insufficient access to higher education, and inefficient or ill-equipped health care are areas where digital tools, and artificial intelligence (AI) in particular, are put forward as the Solution, with a capital S.

Two decades after Castells and Bauman addressed these questions, alternative terms have been applied to contemporary society, often including the term “digital” or, more recently, algorithms and AI. Banking, education, infection tracing, facial recognition, commercial apps, and robots within elderly care are merely a few examples of digital technologies that are integral parts of people’s lives. Similarly, automated, AI-assisted decision-making is becoming increasingly common in social services, unemployment office services, and governmental authorities.

Against this background, what we witness can be described as a multi-layered systemic transformation. This includes not only a technological and a spatial transformation but also a temporal one.

On time

There is an ongoing fragmentation of time, where life is conceived of as fluid, as a set of sequences and happenings rather than a continuity. This technological and social change is not only fast; it is accelerating. The German sociologist Hartmut Rosa identifies three aspects of acceleration.

First, technological acceleration is typically seen in communication, production, and transportation. New innovations have a more or less profound impact on people’s lives. Language processing, facial recognition, and automated decision-making in social services are examples of technological advances debated recently in Sweden and elsewhere.

The second aspect pertains to the acceleration of social change. Whereas social change used to be an extended endeavor, technological innovations trigger social change at a pace probably never seen before. Yet, there are also significant social changes caused by non-technological events. An illuminating example is how the covid-19 pandemic has had a profound effect on social life, that is, on people’s relationships, modes of communication, and use of personal space. At the same time, the virus has triggered digitalization, such as the development of infection tracking devices and digital tools for distance learning, to mention only two. Increased use of Zoom, Teams, and WhatsApp has made it possible to maintain social networks throughout the pandemic.

The third aspect, finally, has to do with the individual’s pace of life. The pace accelerates, and with it the feeling that there is less and less time, despite the belief that technology can increase the amount of free time we desire. This leaves many people with both a sense of immediacy and one of urgency. Things happen here and now, all the time, even as time is becoming scarcer.

On integrity and democracy

In addition to this temporal and spatial transformation, we have altered our sense of personal integrity and boundaries. Today, personal integrity extends much further than before. For example, honor, duties, and rights, anchored in certain places, are still crucial to personal integrity. Yet, integrity is nowadays also located in virtual space: who we are and our personal data are up for grabs. Personal data have become a commodity like any other, and yet they are so much closer to our sense of self-worth and personal boundaries. Therefore, national legislators struggle to regulate things that know no national borders.

Furthermore, there are ethical, democratic, legal, and security-related repercussions of digitalization. There are simultaneous calls to use such technology to monitor and control citizens, patients, clients, students, or customers and, at the same time, to regulate and monitor the people and institutions that do the monitoring. The problem, however, is that given the previously mentioned acceleration of time, legislators and other regulators are always lagging behind. One consequence of this is that reactivity and unpredictability tend to outrun proactivity and predictability.

Conclusions

In many ways, 2020 has been a special year. Apart from the ongoing pandemic, AI has been an important area of innovation and a common topic of public debate. Regardless of which view people may have of AI, it is only one of the pieces in the technology puzzle that will impact our society in the future, but it is an important piece, and it holds a lot of promise and hope.

This year, on the technology side, we have seen both AI innovations and algorithmic breakthroughs, but also a discussion of what the future of machine learning and AI will look like. Will it be more costly and consume more data and energy, or do we see a change in AI learning on the horizon?

On the political side, countries around the world are turning inwards, partly because of the pandemic, but it is not the only reason. If the trend continues, societies in 2021 may become even more closed and protective. For artificial intelligence to be useful, it needs data, and this could mean that in 2021 it may be more difficult to share data between scientists and organizations or to take part in global communities.

In terms of social impact, AI will continue to change the way we live our lives. Among other things, it has already intensified social acceleration and will continue to do so, further changing our sense of space and time, with implications for the rhythm and pace of social life. AI will raise questions of ethics, integrity, transparency, and accountability. On a more philosophical level, AI will eventually alter the boundaries between humans and AI.

References

MIT Technology Review. (2020, July 20). Retrieved from https://www.technologyreview.com/2020/07/20/1005454/openai-machine-learning-language-generator-gpt-3-nlp/

Brinnen, M., & Westman, D. (2019, November 20). Vad är fel med GDPR? – beskrivning av näringslivets utmaningar samt några förslag på förbättringar. Svenskt Näringsliv. Retrieved from https://www.svensktnaringsliv.se/bilder_och_dokument/vad-ar-fel-med-gdprpdf_1004995.html/Vad+r+fel+med+GDPR%253F.pdf

GPT-3. (2020, September 8). A robot wrote this entire article. Are you scared yet, human? The Guardian. Retrieved from https://www.theguardian.com/commentisfree/2020/sep/08/robot-wrote-this-article-gpt-3

HAI Stanford. (2019). Artificial Intelligence Index Report 2019. Retrieved from https://hai.stanford.edu/sites/default/files/ai_index_2019_report.pdf

IT&Telekomföretagens branschkod för AI. (2020). Retrieved from https://www.itot.se/ittelekomforetagens-branschkod-for-ai/

OECD. (2020, October). Digital Government Index. Retrieved from https://www.oecd-ilibrary.org/governance/digital-government-index_4de9f5bb-en;jsessionid=vgfLdG3lpzpUSo5aYi5jQc8F.ip-10-240-5-58

Sharir, O., Peleg, B., & Shoham, Y. (2020, April). The Cost of Training NLP Models. arXiv. Retrieved from https://arxiv.org/pdf/2004.08900.pdf

Thompson, N. C., Lee, K., Manso, G., & Greenewald, K. (n.d.). The Computational Limits of Deep Learning. arXiv. Retrieved from https://arxiv.org/pdf/2007.05558.pdf

Werner, H. (2020, October). IT i Svensk Offentlig sektor. Retrieved from https://portal.radareco.com/content/it-i-svensk-offentlig-sektor